Nvidia recently unveiled its next-generation Vera Rubin AI platform during CES 2026, positioning it as a comprehensive solution to address the skyrocketing computational demands of advanced artificial intelligence systems.

The Vera Rubin AI platform, named after the astronomer Vera Florence Cooper Rubin, marks a significant architectural shift: it introduces six custom-engineered chips designed to work together as a unified AI supercomputing system.

The launch comes at a critical moment for the industry: models are growing rapidly in size, becoming more reasoning-intensive, and demanding infrastructure that can handle trillions of parameters.

Vera Rubin AI Platform: Architecture and Key Components

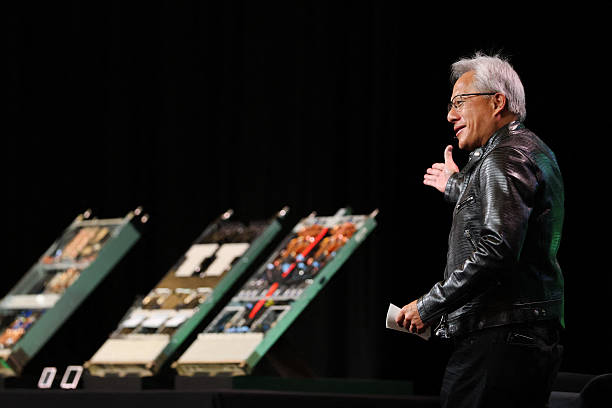

The platform targets the surge in compute required by next-generation reasoning models. At CES 2026, Nvidia CEO Jensen Huang emphasized that advanced AI reasoning approaches require approximately 100 times more computational power than previous iterations.

That is why Vera Rubin was built to succeed the Blackwell architecture and deliver substantial efficiency gains, including support for sparse activation, which allows higher-capacity training of mixture-of-experts (MoE) models.

For organizations, this could translate into direct operational savings and the ability to reallocate surplus GPU capacity to other workloads.
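To make the sparse activation idea concrete, here is a minimal sketch of top-k expert routing as it is commonly described for MoE models. It is a general illustration, not Nvidia's implementation: the function names, gate scores, and expert counts are all hypothetical.

```python
import math


def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    # Rank expert indices by gate score, highest first, and keep the top k.
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    # Softmax over the chosen logits only, so the k weights sum to 1.
    peak = max(gate_logits[i] for i in chosen)
    exps = [math.exp(gate_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def active_expert_fraction(num_experts, k):
    """Fraction of experts that run for a single token."""
    return k / num_experts


# Hypothetical gate scores for one token across 4 experts.
routes = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
print([index for index, _ in routes])  # experts 1 and 3 are selected

# Hypothetical layer with 8 experts, 2 active per token.
print(active_expert_fraction(num_experts=8, k=2))  # 0.25
```

Because only the selected experts run for each token, a model can hold far more parameters than it computes with on any single step, which is where the efficiency argument comes from.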

At the heart of Vera Rubin sits the Rubin GPU, Nvidia’s most advanced graphics processor yet. Built on a 3-nanometer process with 336 billion transistors, it packs a third-generation Transformer Engine optimized for inference workloads.

This delivers 50 petaflops of NVFP4 compute, giving the platform five times the performance of the previous Blackwell GPU on inference tasks and three and a half times the performance on model-training tasks, while using less power through hardware-accelerated adaptive compression.
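As a quick sanity check on those multipliers, the arithmetic can be worked backwards. This sketch assumes the 50 petaflops figure and the fivefold multiplier describe the same inference measurement, and that the training multiplier applies to the same baseline. The article states neither explicitly, so the derived numbers are illustrative only.

```python
def implied_baseline(new_value, speedup):
    """Baseline value implied by a new value and a claimed speedup factor."""
    return new_value / speedup


RUBIN_NVFP4_PFLOPS = 50.0   # figure quoted above
INFERENCE_SPEEDUP = 5.0     # "five times" on inference
TRAINING_SPEEDUP = 3.5      # "three and a half times" on training

# Implied Blackwell inference throughput under the stated assumption.
blackwell_pflops = implied_baseline(RUBIN_NVFP4_PFLOPS, INFERENCE_SPEEDUP)
print(blackwell_pflops)  # 10.0

# Assumption: the training multiplier is measured against the same baseline.
implied_training_pflops = blackwell_pflops * TRAINING_SPEEDUP
print(implied_training_pflops)  # 35.0
```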

Additionally, Nvidia introduces specialized Inference Context Memory Storage with the Vera Rubin AI platform to manage the massive data volumes produced by trillion-parameter models and multi-step reasoning systems.

This addresses a pain point: large language models (LLMs) need persistent memory to maintain conversation context and reasoning chains, which is essential for agentic AI applications.
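To see why context storage grows so quickly, consider the key-value (KV) cache that transformer models typically keep for each token of context. The sketch below estimates its size using the standard formula. It assumes the context data in question is a KV cache, which the article does not state, and the model dimensions are hypothetical rather than those of any specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, batch_size=1):
    """Estimate KV cache size: one key and one value vector per layer, head, and token."""
    keys_and_values = 2  # one tensor for keys, one for values
    per_token = keys_and_values * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len * batch_size


# Hypothetical model: 80 layers, 8 KV heads of dimension 128, 16-bit values.
size = kv_cache_bytes(num_layers=80, num_kv_heads=8, head_dim=128,
                      seq_len=128 * 1024)
print(size / 1024**3)  # 40.0 GiB for a single 128K-token sequence
```

Under these assumptions a single long conversation already needs tens of gibibytes, so many concurrent sessions or longer reasoning chains can push total demand into the terabyte range.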

What Vera Rubin Does for the AI Industry

What sets Nvidia’s Vera Rubin AI platform apart is that it rethinks AI infrastructure specifically to meet rising demands. One example is Inference Context Memory Storage, which targets the difficulty current systems have when conversation histories or reasoning chains demand terabytes of fast storage.

This move further reinforces Nvidia’s dominance in AI infrastructure: the company is maintaining its annual product release cadence and positioning itself to lead the next wave of AI compute, making it increasingly difficult for competitors to close the performance gap.

According to the CES 2026 announcement, Vera Rubin entered full production as of January 2026, with the first products expected to reach major AI companies and cloud providers such as Anthropic, OpenAI, and Amazon in the second half of 2026.

“Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing,” Huang told the audience at CES 2026. “Today, I can tell you that Vera Rubin is in full production.”