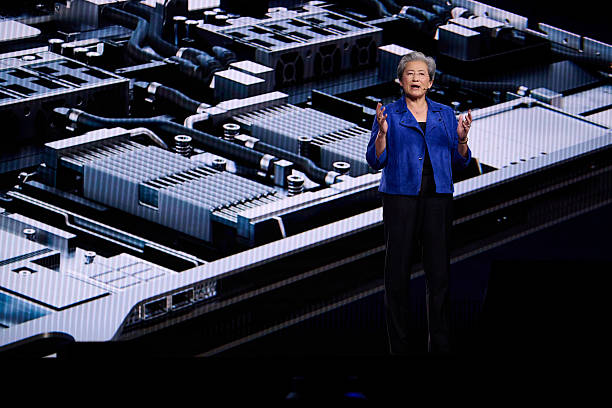

Advanced Micro Devices (AMD) took to the CES 2026 stage to reveal a broad lineup of AI-optimized processors and platforms, spanning consumer laptops and desktop PCs through to data centers.

The lineup, presented by CEO Lisa Su, included the new Ryzen AI 400 Series, Instinct MI455X GPUs, and developer tools like Ryzen AI Halo, all designed to deliver stronger performance in AI workloads.

These products position AMD as a direct rival to Nvidia and its stronghold in AI hardware, especially following Nvidia’s recent announcement of its Vera Rubin AI platform.

AMD’s Ryzen AI 400 Series processors lead the charge for PCs, offering up to 12 Zen 5 cores, Radeon 890M graphics, and XDNA 2 NPUs that reach 60 TOPS. That figure exceeds Microsoft’s 40 TOPS requirement for Copilot+ PCs, and AMD promises multi-day battery life in thin laptops.

Enterprise users are covered as well: the series offers PRO technologies for security and manageability, and ensures consistent AI features across the board.

How AMD Is Different from Nvidia

While Nvidia dominates with GPUs tied to its proprietary CUDA platform, AMD counters with the open ROCm software stack, cost-effective full-stack silicon (CPU+GPU+NPU), and x86 compatibility.

Client chips like Ryzen AI also threaten Nvidia’s discrete GPUs on power efficiency in PCs, while AMD’s Helios rack platform offers on-premises scale without necessarily bringing Nvidia’s ecosystem lock-in.

Because of this, industry analysts view AMD’s on-premises focus as appealing to price-sensitive businesses looking for alternatives to Nvidia.

The launches by both AMD and Nvidia further democratize AI, as they support the industry-wide move of AI compute from the cloud to the edge: a shift of AI processing from centralized cloud data centers to local devices and servers closer to where data is generated.

This hybrid approach brings benefits such as reduced latency, enhanced data privacy and security, scalability, lower bandwidth use and cost, and improved reliability.

However, AMD’s new high-performance AI chips remain a challenger for now, as the industry consensus still holds that Nvidia’s offerings are better. Still, the company’s introduction of scalable and energy-efficient alternatives to Nvidia’s products helps foster competition that could lower costs and further diversify the AI compute landscape.

For instance, the Helios rack-scale platform, which delivers up to 3 exaflops per rack with MI455X GPUs and EPYC Venice CPUs, targets hyperscale data centers strained by surging demand. Global compute needs are projected to grow 100x by the end of the decade.

In other words, where Nvidia leads in high-end AI hardware with its Blackwell and Rubin GPUs, AMD’s high-performance, full-stack offering (CPU+GPU+NPU+networking) suits hybrid AI workloads that combine different AI methods to balance performance, cost, and control.