Nvidia has released Alpamayo, a family of open-source AI models designed to equip autonomous vehicles and robots with human-like reasoning that they can articulate aloud.

Announced at CES 2026, the family includes the flagship 10-billion-parameter Alpamayo 1 vision-language-action (VLA) model, along with simulation tools and large driving datasets. All of it is free for non-commercial use, with the model weights hosted on Hugging Face.

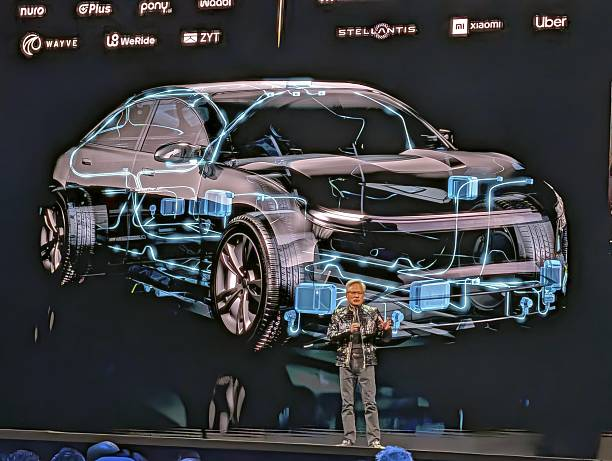

Put simply, Alpamayo is designed to give autonomous vehicles the ability to reason through complex driving scenarios and explain their decisions in plain language. CEO Jensen Huang called it “the world’s first thinking, reasoning autonomous vehicle AI”: a system that doesn’t just react to patterns but actively thinks through problems step by step, much as a human driver would when encountering an unexpected situation.

At the core of the family is Alpamayo 1, a 10-billion-parameter VLA model that processes camera feeds, generates driving trajectories, and produces reasoning traces that explain the logic behind each decision. For example, when navigating a construction zone, the system might output “nudge to the left to increase clearance from the construction cones” along with its planned path.

This transparency marks a departure from the “black box” approach that has characterized autonomous driving systems for years, in which the AI’s decision-making process remained opaque even to developers.

Breaking Down the “Think Out Loud” Technology

Alpamayo operates differently from conventional autonomous driving systems by employing chain-of-thought reasoning. Rather than simply matching visual patterns to predetermined responses, the AI model breaks down complex problems into smaller steps, evaluates cause and effect, and selects the safest path forward. According to Ali Kani, Nvidia’s vice president of automotive, the system breaks down “problems into steps, reasoning through every possibility, and then selecting the safest path before making a decision.”
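To make the evaluate-then-select pattern concrete, here is a toy Python sketch. It is not Alpamayo’s implementation: Alpamayo 1 is a neural VLA model that generates its own reasoning text, whereas this sketch hand-codes hypothetical candidate maneuvers and writes a plain-language trace for each step. The names `Candidate`, `reason_and_select`, and `clearance_m`, and the 1.0 m threshold, are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """A hypothetical candidate maneuver with one illustrative safety metric."""
    name: str
    clearance_m: float  # assumed minimum distance to the nearest obstacle, in metres


def reason_and_select(
    candidates: list[Candidate], min_clearance_m: float = 1.0
) -> tuple[Optional[Candidate], list[str]]:
    """Evaluate each candidate step by step, record a plain-language reason,
    and return the safest acceptable option together with the reasoning trace."""
    trace = []
    acceptable = []
    for c in candidates:
        if c.clearance_m >= min_clearance_m:
            trace.append(f"{c.name}: clearance {c.clearance_m:.1f} m is acceptable")
            acceptable.append(c)
        else:
            trace.append(f"{c.name}: clearance {c.clearance_m:.1f} m is too small, rejected")
    if not acceptable:
        # Nothing passes the check, so fall back to the most conservative action.
        trace.append("no candidate is safe: slow down and stop")
        return None, trace
    # Among acceptable options, "safest" is defined here as largest clearance.
    best = max(acceptable, key=lambda c: c.clearance_m)
    trace.append(f"selected {best.name} because it maximizes clearance")
    return best, trace


# Example: a construction zone where staying in lane passes too close to the cones.
best, trace = reason_and_select([
    Candidate("keep lane", 0.4),
    Candidate("nudge left", 1.6),
    Candidate("nudge right", 0.9),
])
for step in trace:
    print(step)
```

Running it prints four lines: "keep lane" and "nudge right" are rejected and "nudge left" is selected, loosely mirroring the construction-zone trace quoted earlier. The point of the trace is that every intermediate judgment is recorded, not just the final choice.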

Huang touted the release as the “ChatGPT moment for physical AI,” and Nvidia says the model’s reasoning extends to scenarios it never encountered during training. The system also integrates multiple layers of analysis: real-time sensor processing from cameras, lidar, and radar; contextual understanding of traffic signs and road conditions; predictive modeling of potential scenarios; and, most importantly, explanations of why specific actions are being taken.

This multimodal approach allows the AI to perceive its environment through vision, understand context through language models, and execute precise physical actions through vehicle controls.

Nvidia’s Open-Source Approach with Alpamayo

Nvidia’s decision to release Alpamayo as open source represents a strategic departure from the proprietary approach favored by competitors like Tesla. The complete package, aimed at accelerating autonomous vehicle development, includes the Alpamayo 1 model weights on Hugging Face, the AlpaSim simulation framework on GitHub, and more than 1,700 hours of diverse driving data covering rare and complex real-world scenarios across different geographies and weather conditions.

The simulation framework addresses one of the biggest bottlenecks in autonomous vehicle development: the inability to safely test edge cases at scale. AlpaSim recreates real-world driving conditions with realistic sensor modeling and configurable traffic dynamics, allowing teams to validate their systems before deploying them on actual streets.

Several major players have already committed to the platform. Mercedes-Benz, for example, announced that its all-new CLA sedan will feature Alpamayo technology, making it the first production passenger vehicle to use AI-powered chain-of-thought reasoning.

The Safety Question

The shift from pattern matching to reasoning-based systems raises new concerns about safety and liability. When an AI system is described as able to “think” rather than simply “follow a script,” determining responsibility for errors becomes more complex.

However, Nvidia has implemented what it calls the Halos safety system, a classical, rule-based backup layer that can override the AI if it proposes a dangerous trajectory.
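A classical override layer of this kind can be pictured as a set of fixed, human-readable rules sitting between the planner and the vehicle controls. The Python below is a hypothetical illustration, not Nvidia’s Halos code: the `Trajectory` fields, the rule thresholds, and the stop fallback are all assumptions chosen for clarity.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Trajectory:
    """A hypothetical, heavily simplified summary of a planned path."""
    speed_mps: float          # planned speed, metres per second
    min_gap_m: float          # smallest planned distance to any obstacle, metres
    max_lateral_accel: float  # peak sideways acceleration, m/s^2


# Illustrative thresholds only; real limits would come from a formal safety case.
SPEED_LIMIT_MPS = 13.9   # roughly 50 km/h
MIN_GAP_M = 1.0
MAX_LATERAL_ACCEL = 3.0


def violated_rules(t: Trajectory) -> list[str]:
    """Check a trajectory against fixed rules and name every rule it breaks."""
    broken = []
    if t.speed_mps > SPEED_LIMIT_MPS:
        broken.append("speed limit")
    if t.min_gap_m < MIN_GAP_M:
        broken.append("minimum gap")
    if t.max_lateral_accel > MAX_LATERAL_ACCEL:
        broken.append("lateral acceleration")
    return broken


def safety_gate(proposed: Trajectory) -> tuple[Trajectory, list[str]]:
    """Pass the model's trajectory through unchanged unless a rule is broken.
    Otherwise override it with a controlled stop: speed and lateral acceleration
    are set to zero, and other fields are left unchanged in this toy model."""
    broken = violated_rules(proposed)
    if broken:
        return replace(proposed, speed_mps=0.0, max_lateral_accel=0.0), broken
    return proposed, []


# Example: the model proposes a fast pass that cuts too close to an obstacle.
action, reasons = safety_gate(Trajectory(speed_mps=16.0, min_gap_m=0.5, max_lateral_accel=1.2))
print(action, reasons)
```

Because the rules are deterministic and independent of the model, they can be reviewed and tested on their own, which is one reason to pair a reasoning model with a rule-based backstop.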

Why This Matters and What Comes Next After Alpamayo

The autonomous vehicle market is projected to see explosive growth over the coming years. Goldman Sachs estimates the North American robotaxi ride share market could grow at approximately 90% annually from 2025 to 2030, potentially pushing U.S. gross profits in the sector to around $3.5 billion. Alpamayo positions Nvidia both as a chip supplier and as a provider of the intelligence layer that powers autonomous systems.
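The compounding behind that estimate is steep. Assuming the roughly 90% rate applies in each of the five years from 2025 to 2030, and writing $M_t$ for market size in year $t$ (notation introduced here for illustration), the market would end the period about 25 times its starting size:

$$
\frac{M_{2030}}{M_{2025}} = (1 + 0.90)^{5} = 1.9^{5} \approx 24.8
$$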

Alpamayo’s VLA architecture also applies beyond vehicles, to humanoid robots and industrial automation, where it could power navigation that reasons aloud through tasks like obstacle avoidance or multi-agent coordination. That breadth could position Nvidia at the forefront of agentic AI for enterprise systems.

For now, attention is on whether Alpamayo’s open-source approach will help the tech giant maintain its lead in delivering practical AI.